Google has introduced Nested Learning, a new machine learning approach designed to address catastrophic forgetting in continual learning. Presented in a paper at NeurIPS 2025, Nested Learning reframes a single model as a system of interconnected, multi-level optimization problems that are optimized simultaneously. The paradigm bridges the gap between model architecture and optimization algorithm by treating them as the same underlying concept: different levels of optimization, each with its own context flow and update rate. This view enables learning components with greater computational depth and improved long-context memory management.

Nested Learning: A New Machine Learning Paradigm

Nested Learning presents a new machine learning paradigm that views models as interconnected, multi-level optimization problems. It bridges the gap between a model’s architecture and its training algorithm by recognizing both as different “levels” of optimization. Making this structure explicit opens a new dimension for AI design: learning components with greater computational depth, which in turn helps address problems such as catastrophic forgetting.

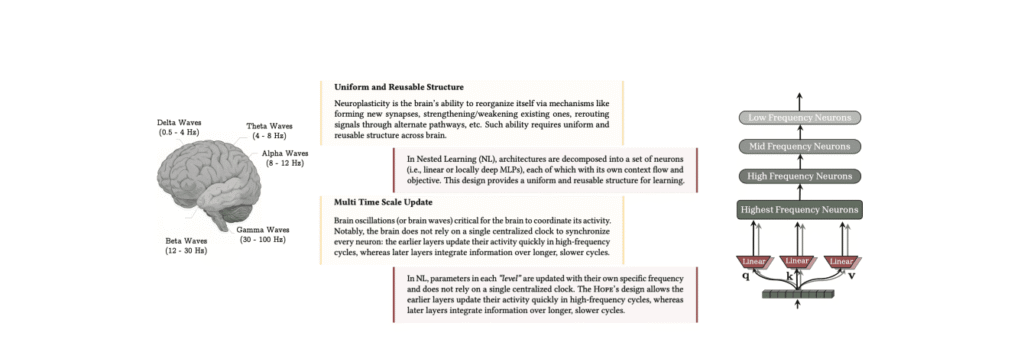

The core of Nested Learning lies in recognizing that complex models are sets of nested or parallel optimization problems, each with its own “context flow”: a distinct set of information from which it learns. From this perspective, current deep learning methods work by compressing these internal context flows. The paper further shows that components such as backpropagation and attention mechanisms can be formalized as simple associative memory modules, revealing a uniform, reusable structure for model design.
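To make the associative-memory view of attention concrete, here is a minimal Python sketch (not code from the paper) of unnormalized linear attention, a simplified relative of softmax attention. It is written as a memory matrix `M` that stores key/value pairs as outer products and is then read with a query. The function names and toy vectors are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Matrix-vector product."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in m]


def write_memory(keys: List[Vector], values: List[Vector]) -> Matrix:
    """Store every (key, value) pair in one matrix: M = sum_i v_i k_i^T."""
    memory = [[0.0] * len(keys[0]) for _ in range(len(values[0]))]
    for k, v in zip(keys, values):
        for r, v_r in enumerate(v):
            for c, k_c in enumerate(k):
                memory[r][c] += v_r * k_c
    return memory


def read_memory(memory: Matrix, query: Vector) -> Vector:
    """Retrieve with a query: M q."""
    return matvec(memory, query)


def linear_attention(keys: List[Vector], values: List[Vector], query: Vector) -> Vector:
    """Unnormalized linear attention computed directly: sum_i (k_i . q) v_i."""
    out = [0.0] * len(values[0])
    for k, v in zip(keys, values):
        score = sum(ki * qi for ki, qi in zip(k, query))
        out = [o + score * vi for o, vi in zip(out, v)]
    return out


keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[3.0, 1.0], [-2.0, 5.0]]
M = write_memory(keys, values)
query = [0.6, 0.8]
print(read_memory(M, [1.0, 0.0]))  # [3.0, 1.0]: the value stored under the first key
print(read_memory(M, query), linear_attention(keys, values, query))  # same result both ways
```

Reading `M` with a query gives the same result as computing the attention sum directly, which is the sense in which this form of attention "is" an associative memory. With orthonormal keys, retrieval is exact; overlapping keys make stored values interfere.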

As a proof-of-concept, the researchers built the “Hope” architecture on Nested Learning principles. Hope is a self-modifying recurrent architecture augmented with “continuum memory systems” (CMS), a spectrum of memory modules that update at different frequencies. In experiments, Hope achieves lower perplexity and higher accuracy than modern recurrent models and standard transformers on language modeling and common-sense reasoning tasks, which the authors present as evidence for the Nested Learning paradigm.

Addressing Catastrophic Forgetting in Machine Learning

Nested Learning is designed to address “catastrophic forgetting,” in which learning a new task degrades performance on previously learned ones. The paradigm views a single model not as one process but as a system of interconnected, multi-level optimization problems. By treating a model’s architecture and its training rule as fundamentally the same kind of thing, namely optimization problems at different levels, Nested Learning makes it possible to build learning components with greater computational depth. This is key to mitigating forgetting and enabling continual learning of the kind the human brain achieves through neuroplasticity.
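Catastrophic forgetting shows up even in the smallest possible model. The toy Python sketch below is not from the paper. It uses a single shared weight `w`, trained with plain SGD at one update rate, first on a hypothetical task A (`y = 2x`) and then on task B (`y = -x`). The task definitions and hyperparameters are illustrative assumptions.

```python
from typing import List, Tuple

Data = List[Tuple[float, float]]


def sgd(w: float, data: Data, lr: float = 0.1, epochs: int = 50) -> float:
    """Fit y = w * x by plain SGD on squared error."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2.0 * (w * x - y) * x  # d/dw of (w*x - y)^2
            w -= lr * grad
    return w


def mse(w: float, data: Data) -> float:
    """Mean squared error of y = w * x on the given data."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


xs = (0.5, 1.0, 1.5)
task_a = [(x, 2.0 * x) for x in xs]   # task A: y = 2x
task_b = [(x, -1.0 * x) for x in xs]  # task B: y = -x

w = sgd(0.0, task_a)
loss_a_before = mse(w, task_a)  # close to 0: task A has been learned
w = sgd(w, task_b)
loss_a_after = mse(w, task_a)   # about 10.5: task A has been overwritten
print(f"task A loss: {loss_a_before:.4f} -> {loss_a_after:.4f}")
```

Because the same parameter, updated at the same rate, serves both tasks, learning B simply overwrites A. Nested Learning’s answer is to spread learning across components that update at different rates.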

In practice, Nested Learning assigns each interconnected optimization problem an update frequency rate, that is, how often its weights are adjusted, and uses these rates to order the problems into levels. This framework extends the notion of memory in models like Transformers into a “continuum memory system” (CMS), which treats memory as a spectrum of modules, each updating at its own rate. The result is a richer, more effective memory system for continual learning than standard architectures, which have only a few update levels.
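The ordering step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the paper’s code. The component names and periods are hypothetical. Each component updates once every `period` steps, and the sketch numbers levels from fastest to slowest, which is an assumed convention.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Component:
    name: str
    period: int  # hypothetical: weights are updated once every `period` steps


def order_into_levels(components: List[Component]) -> List[List[str]]:
    """Group components by update frequency, fastest (shortest period) first."""
    periods = sorted({c.period for c in components})
    return [[c.name for c in components if c.period == p] for p in periods]


def due_for_update(components: List[Component], step: int) -> List[str]:
    """Names of the components whose weights change at this step."""
    return [c.name for c in components if step % c.period == 0]


components = [
    Component("attention_state", 1),
    Component("mlp_slow", 256),
    Component("mlp_fast", 16),
]
print(order_into_levels(components))   # [['attention_state'], ['mlp_fast'], ['mlp_slow']]
print(due_for_update(components, 32))  # ['attention_state', 'mlp_fast']
```

Components that share a period fall into the same level. A standard model corresponds to the degenerate case where nearly everything shares one or two periods.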

As a proof-of-concept, researchers designed “Hope,” a self-modifying architecture built on Nested Learning and CMS blocks. In experiments on language modeling and long-context reasoning, Hope achieves lower perplexity and higher accuracy than modern recurrent models and standard transformers. These results support Nested Learning as a basis for AI systems that can keep learning without forgetting.

We believe the Nested Learning paradigm offers a robust foundation for closing the gap between the limited, forgetting nature of current LLMs and the remarkable continual learning abilities of the human brain.

The Nested Learning Paradigm and Model Design

Nested Learning views machine learning models not as single processes but as interconnected, multi-level optimization problems working simultaneously. It treats a model’s architecture and its training rules as fundamentally the same thing: different “levels” of optimization, each with its own “context flow” and update rate. Making this structure explicit enables AI components with greater computational depth and addresses issues like catastrophic forgetting by allowing updates at multiple timescales.

Seen this way, existing deep learning methods essentially compress their internal context flows. The researchers show that the training process itself, specifically backpropagation, can be modeled as an associative memory that maps each data point to the value of its local error. Key architectural components, such as the attention mechanism in transformers, can likewise be formalized as simple associative memory modules. Building on this uniform view, the framework introduces a “continuum memory system” (CMS), in which memory is a spectrum of modules, each updating at its own frequency.
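The backpropagation-as-memory idea can be seen in the simplest case. For one linear layer `y = W x` with squared loss, the gradient with respect to `W` is the outer product of the local error and the input. A gradient step therefore "writes" the pair (key = input, value = scaled error) into `W`, just as an associative memory would. The Python sketch below covers that single-layer case only, under these assumptions. The paper’s formalization is more general, and the names here are hypothetical.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Matrix-vector product."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in m]


def sgd_step(w: Matrix, x: Vector, target: Vector, lr: float) -> Tuple[Matrix, Vector]:
    """One gradient step for y = W x with loss L = 0.5 * ||y - target||^2."""
    y = matvec(w, x)
    delta = [yi - ti for yi, ti in zip(y, target)]  # local error dL/dy
    # dL/dW = delta x^T, so the update stores the pair (key=x, value=-lr*delta)
    # in W as an outer product, exactly like an associative-memory write.
    new_w = [[wij - lr * di * xj for wij, xj in zip(row, x)] for row, di in zip(w, delta)]
    return new_w, delta


w0 = [[0.0, 0.0], [0.0, 0.0]]
x = [1.0, 0.0]
w1, delta = sgd_step(w0, x, target=[1.0, 2.0], lr=0.5)
print(delta)                    # [-1.0, -2.0]
print(matvec(w1, x))            # [0.5, 1.0]: input x now recalls a step toward its target
print(matvec(w1, [0.0, 1.0]))   # [0.0, 0.0]: an orthogonal key is unaffected
```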

As a proof-of-concept, the researchers designed the “Hope” architecture, a self-modifying variant of the Titans architecture, using Nested Learning principles. Hope uses unbounded levels of in-context learning and CMS blocks to scale to larger context windows, in effect optimizing its own memory through a self-referential process. In experiments, Hope achieved lower perplexity and higher accuracy on language modeling and common-sense reasoning tasks than modern recurrent models and standard transformers.

Implementing Nested Learning: Deep Optimizers & Continuum Memory

Nested Learning treats a model as a set of interconnected, multi-level optimization problems, so that the model’s architecture and its training algorithm become different “levels” of the same optimization process. Making this structure explicit lets designers build AI with greater computational depth, aimed at problems like catastrophic forgetting, where learning new information compromises retention of old knowledge.

The “continuum memory system” (CMS) is central to Nested Learning. A standard Transformer has, in effect, two kinds of memory: its sequence model (attention) acts as short-term memory, while its feedforward networks act as long-term memory that stores knowledge from pre-training. CMS generalizes this into a spectrum of memory modules, each updating at its own frequency. Because different modules retain information over different timescales, the result is a richer memory system that suits continual learning and increases overall learning capacity.
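A rough Python sketch of the CMS idea follows, loosely modeled on the description above and not taken from the paper. It uses a chain of blocks, each of which accumulates its gradient at every step but applies it only once every `period` steps. To keep it short, each block is a single scalar gain rather than an MLP. The periods, learning rate, input, and target are hypothetical choices.

```python
from typing import List, Tuple


def cms_train(periods: List[int], steps: int, x: float = 1.0,
              target: float = 2.0, lr: float = 0.02) -> Tuple[List[float], List[int], List[float]]:
    """Toy continuum memory: a chain of scalar blocks, y = w[k-1] * ... * w[0] * x.

    Every block accumulates its gradient at every step, but block i only applies
    the accumulated sum once every periods[i] steps, so each block changes at its
    own frequency.
    """
    w = [1.0] * len(periods)
    buffers = [0.0] * len(periods)  # gradient accumulated since each block's last update
    update_counts = [0] * len(periods)
    losses = []
    for step in range(1, steps + 1):
        y = x
        for wi in w:
            y *= wi
        err = y - target
        losses.append(0.5 * err * err)  # L = 0.5 * (y - target)^2
        for i in range(len(w)):
            others = 1.0
            for j, wj in enumerate(w):
                if j != i:
                    others *= wj
            buffers[i] += err * x * others  # dL/dw[i]
        for i, period in enumerate(periods):
            if step % period == 0:  # block i's clock ticks: apply the chunk's gradients
                w[i] -= lr * buffers[i]
                buffers[i] = 0.0
                update_counts[i] += 1
    return w, update_counts, losses


w, counts, losses = cms_train(periods=[1, 4, 16], steps=32)
print(counts)  # [32, 8, 2]
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.5f}")
```

The fast block reacts to every example, while the slow block changes only after integrating a long chunk of signal. This is the multi-timescale behavior CMS relies on to retain information at different horizons.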

As a proof-of-concept, researchers developed “Hope,” a self-modifying variant of the Titans architecture based on Nested Learning principles. Hope leverages unbounded levels of in-context learning and incorporates CMS blocks to handle larger context windows. In experiments, it achieves lower perplexity and higher accuracy than modern recurrent models and standard transformers on language modeling and common-sense reasoning tasks.

Hope: A Self-Modifying Architecture & Experimental Results

Hope is a self-modifying recurrent architecture designed as a proof-of-concept for the Nested Learning paradigm. A variant of the Titans architecture, it leverages unbounded levels of in-context learning and incorporates continuum memory system (CMS) blocks to handle larger context windows. Standard Titans has only two levels of parameter updates. Hope, by contrast, optimizes its own memory through a self-referential process, which in effect creates looped learning levels.
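The self-referential idea of a learner optimizing part of its own update rule can be illustrated with a much simpler, well-known stand-in: hypergradient descent. Here an outer level tunes the step size used by an inner gradient-descent level. This Python sketch is a swapped-in technique for illustration only and is not Hope’s actual mechanism. The objective, function name, and constants are hypothetical.

```python
from typing import Callable, Tuple


def hypergradient_descent(grad_fn: Callable[[float], float], theta: float,
                          lr: float = 0.01, meta_lr: float = 0.001,
                          steps: int = 100) -> Tuple[float, float]:
    """Gradient descent whose step size is itself learned (hypergradient descent).

    Inner level: theta -= lr * g_t.
    Outer level: lr += meta_lr * g_t * g_{t-1}, i.e. gradient descent on lr,
    since d loss(theta_t) / d lr = -g_t * g_{t-1}.
    """
    prev_grad = 0.0
    for _ in range(steps):
        g = grad_fn(theta)
        lr += meta_lr * g * prev_grad  # outer level: update the update rule
        theta -= lr * g                # inner level: update the parameter
        prev_grad = g
    return theta, lr


# Toy objective f(theta) = 0.5 * (theta - 3)^2, whose gradient is theta - 3.
theta, learned_lr = hypergradient_descent(lambda t: t - 3.0, theta=0.0)
print(f"theta = {theta:.4f}, learned lr = {learned_lr:.4f}")
```

The step size starts at 0.01 and grows while consecutive gradients agree in sign, so the outer level speeds up the inner one without manual tuning.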

Experiments were conducted to validate Nested Learning, CMS, and Hope’s performance across language modeling, long-context reasoning, continual learning, and knowledge incorporation. The results, detailed in the researchers’ published paper, demonstrate that Hope achieves lower perplexity and higher accuracy compared to modern recurrent models and standard transformers on diverse language modeling and common-sense reasoning tasks.
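Perplexity, the metric behind the “lower perplexity” results, is the exponential of the average negative log-likelihood a model assigns to the true tokens. Lower values mean the model is less “surprised.” Below is a short Python sketch of this standard definition. The token probabilities are made-up illustrations, not data from the paper.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """exp of the mean negative log-likelihood of the true tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


# Spreading probability uniformly over 4 candidates gives a perplexity of about 4;
# putting more mass on the correct tokens lowers it.
print(perplexity([0.25, 0.25, 0.25, 0.25]))
print(perplexity([0.9, 0.6, 0.8, 0.7]))
```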

Central to Hope is its “continuum memory system” (CMS), which views memory as a spectrum of modules, each updating at its own specific frequency. This yields a richer, more effective memory system for continual learning. It goes beyond the two-tier split in standard Transformers, where the sequence model serves as short-term memory and the feedforward networks as long-term memory.

When it comes to continual learning and self-improvement, the human brain is the gold standard. It adapts through neuroplasticity – the remarkable capacity to change its structure in response to new experiences, memories, and learning.