Quantum machine learning is a rapidly developing computational field that asks whether quantum processors can enhance or accelerate machine learning tasks. Su Yeon Chang from Los Alamos National Laboratory and M. Cerezo provide a comprehensive overview of this emerging discipline, clarifying its core principles and current limitations. Their review surveys the diverse applications of quantum machine learning, from optimisation and supervised learning to generative modelling, and critically assesses the evidence behind claims of quantum advantage. By examining the interplay between theoretical guarantees, practical implementations, and classical benchmarks, the authors offer a guide to the quantum machine learning landscape that helps researchers judge the realistic potential of these approaches.

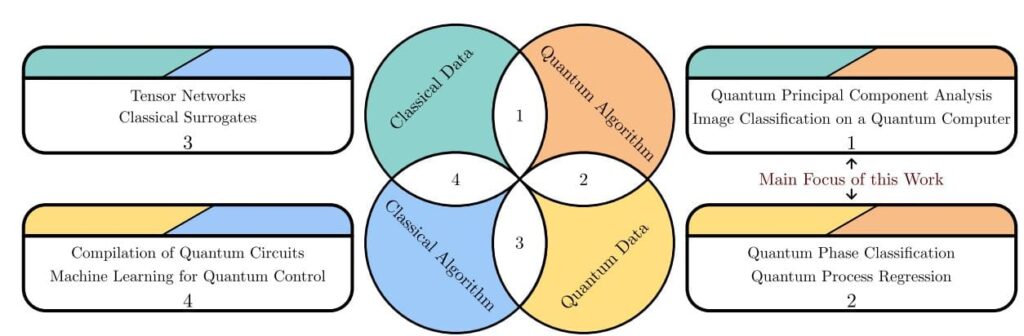

Researchers are exploring quantum machine learning (QML) for optimisation, supervised, unsupervised and reinforcement learning, and generative modelling, in the hope of tackling these tasks more efficiently than classical models can. This work offers a high-level overview of QML, focusing on scenarios where the quantum device serves as the primary learning or data-generating unit. The authors outline the tensions that run through the field: between practical implementation and theoretical guarantees, between different data access models and the speedups they allow, and between comparisons with classical baselines and claimed quantum advantages. They flag where the evidence for quantum advantage is strong, conditional, or currently lacking, and identify the open questions that remain. By clarifying these nuances and debates, the authors aim to equip readers to assess the potential of this emerging field.

Neural Networks and Tensor Network Methods

Several research threads converge at the intersection of quantum machine learning, quantum sensing, and quantum control. Neural network quantum states represent quantum many-body wavefunctions with neural networks, a potentially advantageous way to simulate complex quantum systems. Tensor networks efficiently represent high-dimensional quantum states with limited entanglement and, when combined with machine learning, become more powerful tools for analyzing and simulating quantum systems. Machine learning is also being used to identify and characterize quantum phase transitions, a key application in condensed matter physics.
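To make the idea of a neural network quantum state concrete, the sketch below uses one well-known ansatz, the restricted Boltzmann machine (RBM) of Carleo and Troyer, chosen for illustration rather than taken from the review. It assumes real-valued parameters (so every amplitude is positive) and enumerates all spin configurations, which is only feasible for a handful of spins. The function names and parameter values are hypothetical.

```python
import itertools
import math


def rbm_amplitude(spins, a, b, W):
    """Unnormalized RBM amplitude with the hidden units summed out:
    psi(s) = exp(sum_i a_i s_i) * prod_j 2 cosh(b_j + sum_i W[j][i] s_i)."""
    visible_term = math.exp(sum(ai * si for ai, si in zip(a, spins)))
    hidden_term = 1.0
    for bj, row in zip(b, W):  # one factor per hidden unit j
        hidden_term *= 2 * math.cosh(bj + sum(wji * si for wji, si in zip(row, spins)))
    return visible_term * hidden_term


def rbm_state(n_spins, a, b, W):
    """Normalized amplitudes over all 2**n_spins configurations in {-1, +1}^n (small n only)."""
    configs = list(itertools.product((-1, 1), repeat=n_spins))
    amplitudes = [rbm_amplitude(s, a, b, W) for s in configs]
    norm = math.sqrt(sum(x * x for x in amplitudes))
    return {s: x / norm for s, x in zip(configs, amplitudes)}


if __name__ == "__main__":
    # Hypothetical parameters: 3 visible spins, 2 hidden units.
    state = rbm_state(3, a=[0.1, -0.2, 0.3], b=[0.05, 0.1],
                      W=[[0.2, -0.1, 0.4], [0.3, 0.2, -0.5]])
    for config, amp in state.items():
        print(config, round(amp, 4))
```

With all parameters set to zero, every configuration receives the same amplitude. In realistic settings the exponential enumeration is replaced by Monte Carlo sampling of configurations, and the parameters are optimized variationally.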

A major theme is the use of machine learning, often reinforcement learning, to optimize quantum circuits by reducing gate count, minimizing errors, and improving performance, which is crucial for near-term quantum computers. Quantum sensing uses quantum systems, such as nitrogen-vacancy (NV) centers in diamond, to measure physical quantities with high precision. Quantum metrology exploits entanglement to push that precision beyond classical limits, with the Fisher information quantifying how precisely a parameter can be estimated from measurement data. Machine learning is increasingly applied to quantum sensing to improve signal processing, optimize sensing protocols, enhance sensitivity, and learn optimal sensing parameters.
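The role of the Fisher information can be illustrated with textbook, noiseless probe models (an assumption, not a protocol from the review). For a measurement with outcome probabilities p_k(φ), the classical Fisher information is F(φ) = Σ_k (∂p_k/∂φ)² / p_k, and the Cramér-Rao bound limits any unbiased estimator to Δφ ≥ 1/√(νF) after ν repetitions. An idealized single-qubit Ramsey measurement gives F = 1, so N independent qubits give F = N (the standard quantum limit), whereas an idealized N-qubit GHZ state read out by parity gives F = N² (the Heisenberg limit). The function names below are hypothetical, and derivatives are approximated by central finite differences.

```python
import math


def fisher_information(probs, phi, h=1e-6):
    """Classical Fisher information F(phi) = sum_k (dp_k/dphi)^2 / p_k.

    `probs` maps a phase to a list of outcome probabilities; derivatives are
    approximated with central finite differences of step h.
    """
    p = probs(phi)
    p_plus = probs(phi + h)
    p_minus = probs(phi - h)
    total = 0.0
    for pk, pp, pm in zip(p, p_plus, p_minus):
        if pk > 0:  # skip zero-probability outcomes to avoid division by zero
            dp = (pp - pm) / (2 * h)
            total += dp * dp / pk
    return total


def ramsey_single_qubit(phi):
    """Idealized single-qubit Ramsey readout: outcomes with cos^2(phi/2) and sin^2(phi/2)."""
    return [math.cos(phi / 2) ** 2, math.sin(phi / 2) ** 2]


def ghz_parity(n):
    """Idealized n-qubit GHZ probe with parity readout: the phase is imprinted n times."""
    return lambda phi: [math.cos(n * phi / 2) ** 2, math.sin(n * phi / 2) ** 2]


def cramer_rao_bound(fisher, repetitions):
    """Lower bound on the standard deviation of an unbiased phase estimator."""
    return 1 / math.sqrt(repetitions * fisher)


if __name__ == "__main__":
    phi = 0.2
    for n in (1, 4, 10):
        f_independent = n * fisher_information(ramsey_single_qubit, phi)  # additive over qubits
        f_ghz = fisher_information(ghz_parity(n), phi)
        print(n, cramer_rao_bound(f_independent, 1), cramer_rao_bound(f_ghz, 1))
```

The printed bounds shrink as 1/√N for independent qubits and as 1/N for the GHZ probe; real devices add noise that erodes this gap, which is one place where learned sensing protocols are applied.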

Beyond circuit compilation, reinforcement learning is being applied to quantum error correction (QEC) to optimize codes and decoding strategies. Variational quantum algorithms, which pair parameterized quantum circuits with classical optimizers, are central to many of these areas: they are used to solve quantum many-body problems, optimize quantum sensing protocols, and control quantum systems. Reinforcement learning is well suited to problems where the optimal strategy is unknown, which is why it appears in quantum circuit optimization, QEC, and the design of sensing protocols.

More broadly, machine learning is being used to improve the performance of quantum devices and experiments. Combining QML, quantum sensing, and quantum control creates a feedback loop: quantum sensing gathers data, machine learning analyzes it and learns how to control the system, and that control in turn improves the sensor’s performance. The research landscape is shifting towards integrating machine learning across the entire quantum technology stack, in both computing and sensing.
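The variational loop described above can be sketched under strong simplifying assumptions: one qubit simulated classically, a single-parameter ansatz RY(θ)|0⟩, and a hypothetical toy Hamiltonian H = Z + X whose ground energy is −√2. Gradients come from the parameter-shift rule, ∂⟨H⟩/∂θ = [⟨H⟩(θ + π/2) − ⟨H⟩(θ − π/2)]/2, which is exact for rotation gates generated by a Pauli operator and needs only two extra circuit evaluations; plain gradient descent stands in for the classical optimizer. All names are illustrative.

```python
import math


def ansatz_state(theta):
    """Amplitudes of RY(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1> (real for this ansatz)."""
    return math.cos(theta / 2), math.sin(theta / 2)


def energy(theta):
    """Expectation <H> for the hypothetical toy Hamiltonian H = Z + X."""
    a0, a1 = ansatz_state(theta)
    expect_z = a0 * a0 - a1 * a1
    expect_x = 2 * a0 * a1  # 2 Re(conj(a0) a1), with real amplitudes
    return expect_z + expect_x


def parameter_shift_gradient(cost, theta):
    """Exact derivative for a gate exp(-i theta P / 2) with Pauli P: two shifted evaluations."""
    return (cost(theta + math.pi / 2) - cost(theta - math.pi / 2)) / 2


def run_vqe(theta0=0.1, learning_rate=0.2, steps=100):
    """Classical optimizer loop: gradient descent driven by parameter-shift gradients."""
    theta = theta0
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(energy, theta)
    return theta, energy(theta)


if __name__ == "__main__":
    theta, e = run_vqe()
    print(f"theta = {theta:.4f}, <H> = {e:.6f}, exact ground energy = {-math.sqrt(2):.6f}")
```

Starting from θ = 0.1, the loop converges to θ ≈ −3π/4, where ⟨H⟩ ≈ −√2. On hardware, `energy` would be replaced by repeated circuit executions and measurement averages, so each gradient estimate carries shot noise.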

A PAC Learning Framework for Assessing Quantum Advantage

This work investigates quantum machine learning, a computational paradigm that uses quantum resources to enhance machine learning tasks. The authors frame QML around scenarios in which the quantum device actively generates or processes data, and examine how practical implementation interacts with theoretical guarantees, with data access models, and with the speedups that quantum approaches may offer over classical algorithms. At the core of the work is a formal notion of learning in the QML context. The authors adopt the Probably Approximately Correct (PAC) learning framework, in which an (ε, δ)-PAC learner is an algorithm that, given sufficient data, produces a hypothesis whose accuracy is guaranteed within quantifiable tolerances.
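One common way to write the (ε, δ)-PAC condition is shown below. The notation is assumed rather than taken from the review: a data distribution $\mathcal{D}$, a training set $S$ of $m$ samples drawn from it, a loss $\ell$, a hypothesis class $\mathcal{H}$ over which the optimal error is taken (the agnostic setting), and $h_S$, the hypothesis the learner outputs. The probability is over the draw of $S$ and any internal randomness of the learner.

```latex
\begin{aligned}
R(h) &= \mathbb{E}_{(x,y)\sim\mathcal{D}}\big[\ell(h(x), y)\big], \\
\Pr_{S\sim\mathcal{D}^m}\Big[ R(h_S) \le \min_{h\in\mathcal{H}} R(h) + \varepsilon \Big] &\ge 1 - \delta .
\end{aligned}
```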

Specifically, such a learner must, with probability at least 1 − δ, output a hypothesis whose generalization error is no more than the optimal achievable error plus a small value ε. This gives a rigorous mathematical basis for evaluating the performance of QML algorithms. The authors distinguish the empirical loss, computed on the training data, from the generalization error, which measures performance on unseen data drawn from the same distribution. The difference between these two quantities, the generalization gap, is a critical metric for assessing how well a model will perform beyond its training set. Together, these definitions provide a foundation for analyzing and developing QML algorithms.
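The empirical loss and the generalization gap can be seen in a purely classical toy problem, which is entirely hypothetical and unrelated to any quantum model in the review: inputs drawn uniformly from [0, 1], labels set by a threshold at 0.5 and flipped with probability 0.1, and a learner that minimizes the empirical 0-1 loss over threshold classifiers. Because the true generalization error is not available in closed form here, it is approximated by the loss on a large fresh sample, so the reported gap is itself an estimate.

```python
import random


def zero_one_loss(prediction, label):
    """0-1 loss: 1 for a misclassification, 0 otherwise."""
    return int(prediction != label)


def empirical_loss(h, data):
    """Average loss of hypothesis h over a list of (x, y) pairs."""
    return sum(zero_one_loss(h(x), y) for x, y in data) / len(data)


def sample(n, rng, noise=0.1):
    """Hypothetical distribution: x ~ U[0, 1], y = [x > 0.5], flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < noise:
            y = 1 - y
        data.append((x, y))
    return data


def fit_threshold(data):
    """Empirical risk minimization over threshold classifiers h_t(x) = [x > t]."""
    best_t, best_loss = 0.0, float("inf")
    for t in sorted(x for x, _ in data):
        loss = empirical_loss(lambda x, t=t: int(x > t), data)
        if loss < best_loss:
            best_t, best_loss = t, loss
    return best_t


rng = random.Random(0)
train = sample(50, rng)
held_out = sample(20_000, rng)  # a large fresh sample approximates the generalization error
t_hat = fit_threshold(train)
hypothesis = lambda x: int(x > t_hat)
train_loss = empirical_loss(hypothesis, train)
estimated_generalization_error = empirical_loss(hypothesis, held_out)
generalization_gap = estimated_generalization_error - train_loss
print(f"threshold={t_hat:.3f} train={train_loss:.3f} "
      f"held-out={estimated_generalization_error:.3f} gap={generalization_gap:.3f}")
```

Since the threshold is chosen to fit the training sample, the gap is usually positive, and it tends to shrink as the training set grows. No classifier can beat the 0.1 label-noise rate on this distribution, which plays the role of the optimal error in the PAC condition.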

Simpler Quantum Pipelines For Practical Learning

Quantum machine learning stands at a pivotal moment, and its potential for practical applications remains an active subject of debate and investigation. Formal separations between quantum and classical learning models have been demonstrated, but they often rely on specific theoretical conditions and have not yet translated into broadly applicable algorithms for everyday problems. Efforts to bridge this gap, whether by directly adapting established quantum algorithms or by replicating classical deep learning techniques on quantum hardware, face significant challenges. The review suggests that future progress may depend on simpler, more transparent quantum pipelines with clearly stated assumptions and data access models, which allow rigorous comparison against strong classical benchmarks.

However, the field shows considerable promise when applied to inherently quantum data, where it has already improved methods for learning state properties, designing measurements, compressing information, and integrating experimental results with inference. These successes, which build directly on the principles of quantum mechanics, suggest a natural path forward for quantum-native generative modeling focused on state preparation, sampling, and learning through measurement. The authors caution that continued progress requires avoiding overstatement of near-term benefits and being precise about the specific advantages offered by quantum sampling structures and physical priors. Future advances in this rapidly evolving field will likely come from a deeper understanding of the interplay between data models, encodings, and experimental design.
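As one illustration of learning state properties from measurements, the sketch below implements the randomized single-qubit Pauli version of classical shadows (Huang, Kueng, and Preskill), a technique chosen here for illustration rather than taken from the review. It assumes a single qubit in the state RY(θ)|0⟩ whose measurement outcomes are sampled classically. For each shot, a Pauli basis X, Y, or Z is picked uniformly at random; the single-shot estimate of ⟨P⟩ is 3b if basis P was measured with outcome b = ±1, and 0 otherwise. Function names are hypothetical.

```python
import math
import random


def bloch_vector(theta):
    """RY(theta)|0> has Bloch vector (sin theta, 0, cos theta)."""
    return {"X": math.sin(theta), "Y": 0.0, "Z": math.cos(theta)}


def classical_shadow_estimates(theta, shots, seed=0):
    """Estimate <X>, <Y>, <Z> from randomized single-qubit Pauli measurements."""
    rng = random.Random(seed)
    r = bloch_vector(theta)
    totals = {"X": 0.0, "Y": 0.0, "Z": 0.0}
    for _ in range(shots):
        basis = rng.choice("XYZ")
        # Born rule: outcome +1 with probability (1 + <P>)/2 for the measured Pauli P.
        b = 1 if rng.random() < (1 + r[basis]) / 2 else -1
        # Inverting the measurement channel gives 3b for the measured Pauli and 0 for the others.
        totals[basis] += 3 * b
    return {p: totals[p] / shots for p in totals}


if __name__ == "__main__":
    theta = 0.7
    print(classical_shadow_estimates(theta, shots=200_000), "exact:", bloch_vector(theta))
```

The same set of shots yields estimates of all three Pauli expectations at once, which is the property that makes classical shadows attractive when many observables must be estimated from limited measurement data.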